gRPC Networking: The Best Remote Procedure Call Framework for Modern Distributed Systems

As applications grow in scale and complexity, efficient inter-service communication becomes critical. In our last post, “Virtual Private Clouds and Layered Traffic Workflows”, we introduced gRPC as a remote procedure call (RPC) framework commonly used in microservice architectures. gRPC has emerged as a leading RPC framework designed to meet the performance, scalability, and developer-productivity needs of modern distributed systems. In this article, we explain what gRPC is, why it’s needed, how it works, its role in microservices and other architectures, and how it compares with alternative approaches.

What is gRPC?

gRPC (gRPC Remote Procedure Calls) is an open-source, high-performance RPC framework originally created by Google. It allows clients and servers to communicate by defining service interfaces and message payloads using Protocol Buffers (protobuf). gRPC generates client and server code in multiple languages from those definitions, enabling strongly typed, cross-language RPCs.

Key Characteristics:

Uses HTTP/2 as the transport protocol

Uses Protocol Buffers (a compact binary serialization format) by default

Supports four call types (unary, server streaming, client streaming, and bidirectional streaming), with synchronous and asynchronous APIs in most languages

First-class support for code generation across many languages

Built-in features for deadlines, cancellations, authentication, and load balancing

Why gRPC is Needed

Modern application architectures — microservices, polyglot environments, real-time systems, and edge/cloud-native deployments — place new demands on inter-service communication. gRPC addresses those demands in several ways:

Performance and efficiency

Binary serialization (Protocol Buffers) typically produces smaller payloads than equivalent JSON over HTTP, because field names are replaced by compact numeric tags, reducing bandwidth and serialization/deserialization overhead.

HTTP/2 multiplexing and header compression reduce latency and remove HTTP-level head-of-line blocking, so many concurrent RPCs can share a single connection (packet loss can still stall all streams at the TCP layer).

Streaming RPCs avoid setting up a new call for every message, which makes large data transfers and real-time flows more efficient.
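To make the size difference concrete, here is a minimal Python sketch that hand-encodes a small message using the protobuf wire format (base-128 varints and length-delimited fields) and compares it with the equivalent compact JSON. The message shape `{ int64 id = 1; string name = 2; }` and the helper names are hypothetical; real services would use the classes generated by `protoc` rather than encoding bytes by hand.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte, value = value & 0x7F, value >> 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_user(user_id: int, name: str) -> bytes:
    """Hand-encode a hypothetical message { int64 id = 1; string name = 2; }."""
    name_bytes = name.encode("utf-8")
    return (
        encode_varint(1 << 3 | 0) + encode_varint(user_id)  # field 1, wire type 0 (varint)
        + encode_varint(2 << 3 | 2)                          # field 2, wire type 2 (length-delimited)
        + encode_varint(len(name_bytes)) + name_bytes
    )


proto_bytes = encode_user(150, "Ada")
json_bytes = json.dumps({"id": 150, "name": "Ada"}, separators=(",", ":")).encode("utf-8")
print(len(proto_bytes), len(json_bytes))  # 8 23
```

Only field numbers and wire types go on the wire, never field names, which is where much of the saving comes from; the exact ratio depends heavily on the payload.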

Strongly typed APIs and code generation

Protobuf interface definitions create a contract; client and server stubs are generated to reduce boilerplate and runtime errors.

Compile-time type checking and generated models speed development and reduce integration bugs.

Streaming and real-time patterns

Native streaming primitives (client, server, bidirectional) make real-time and event-driven workloads straightforward (e.g., telemetry, chat, IoT).

Interoperability in polyglot environments

gRPC supports many languages (Go, Java, C#, Python, C++, Node.js, Ruby, and more), making it easier to integrate services written in different stacks.

Operational features

Built-in support for deadlines/timeouts, cancellation, and metadata (headers) helps build resilient services.

Integration points for authentication (TLS/mTLS, token-based auth), tracing, and observability.
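Deadlines travel between services as metadata. The gRPC over HTTP/2 protocol carries the remaining time in a `grpc-timeout` header: a positive integer of at most 8 digits followed by a unit (`H`, `M`, `S`, `m`, `u`, `n`). The Python sketch below, a simplified illustration with hypothetical helper names, encodes a remaining budget in the finest unit that fits and shows how a service could forward what is left of its own deadline to a downstream call. gRPC libraries do this encoding for you when you set a deadline on a call.

```python
import time

# (unit suffix, nanoseconds per unit), from finest to coarsest
_UNITS = [
    ("n", 1),
    ("u", 1_000),
    ("m", 1_000_000),
    ("S", 1_000_000_000),
    ("M", 60 * 1_000_000_000),
    ("H", 3_600 * 1_000_000_000),
]
_MAX_VALUE = 99_999_999  # the protocol allows at most 8 digits


def encode_grpc_timeout(remaining_ns: int) -> str:
    """Encode a remaining duration as a grpc-timeout header value."""
    if remaining_ns <= 0:
        raise ValueError("deadline already exceeded")
    for unit, ns_per_unit in _UNITS:
        value = -(-remaining_ns // ns_per_unit)  # ceiling division keeps the value positive
        if value <= _MAX_VALUE:
            return f"{value}{unit}"
    return f"{_MAX_VALUE}H"  # clamp absurdly long timeouts


class Deadline:
    """Absolute deadline carried through a chain of calls (hypothetical helper)."""

    def __init__(self, timeout_s: float) -> None:
        self._expires_ns = time.monotonic_ns() + int(timeout_s * 1_000_000_000)

    def remaining_ns(self) -> int:
        return self._expires_ns - time.monotonic_ns()

    def outgoing_metadata(self) -> dict:
        # Forward only the time that is left, not the caller's original timeout.
        return {"grpc-timeout": encode_grpc_timeout(self.remaining_ns())}


print(encode_grpc_timeout(50_000_000))     # 50000000n
print(encode_grpc_timeout(250_000_000))    # 250000u
print(encode_grpc_timeout(5_000_000_000))  # 5000000u
```

A service that received a 500 ms deadline and spent about 200 ms on local work would forward roughly `300000u` downstream, so every hop in the chain gives up at the same moment instead of each restarting its own clock.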

How gRPC Works

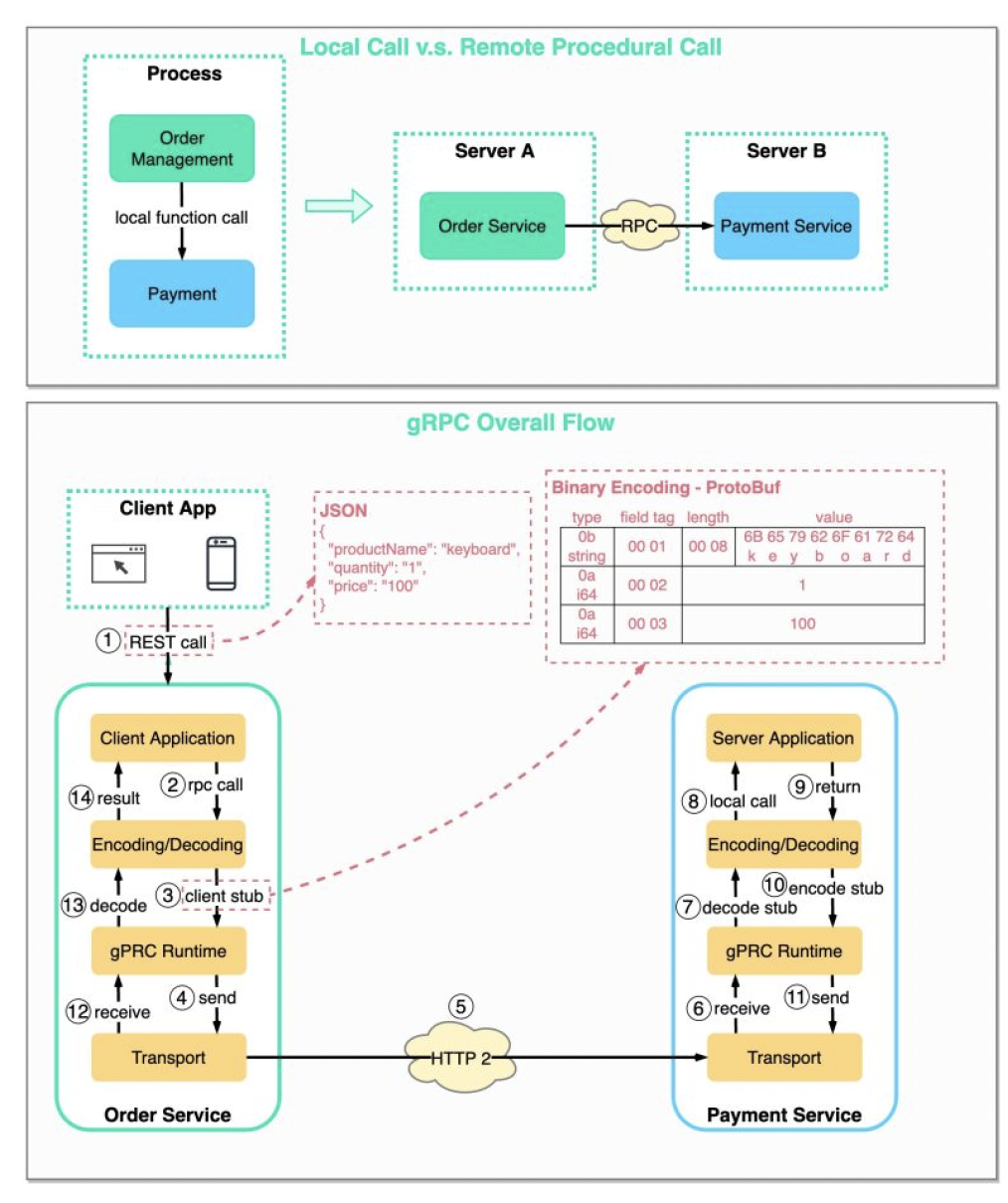

gRPC call workflow (source: ByteByteGo)

gRPC implements RPC semantics on top of HTTP/2 and protobuf. The basic development and runtime flow:

Define service and messages

Developers write a .proto file that declares services and RPC methods, with request and response message types.

Example (conceptual):

service UserService { rpc GetUser(GetUserRequest) returns (GetUserResponse); }

Generate code

Code generation tools (protoc and language-specific plugins) create client and server stubs, data classes, and serializers/deserializers.

Implement server logic

The server implements the service interface generated from the .proto definition and registers it with the gRPC runtime.

Create a client

The generated client stub exposes strongly typed methods that marshal requests into protobuf and send them over an HTTP/2 connection to the server.
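The server and client steps above can be sketched in-process to show what generated code does for you. In this hypothetical Python sketch, `Registry` stands in for the gRPC runtime, `add_user_service` and `UserServiceStub` play the roles of generated registration code and client stub, and JSON stands in for protobuf to keep it short. The only convention borrowed from real gRPC is that each method is addressed by a path of the form `/package.Service/Method`; the package name `example` is an assumption.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, Dict


@dataclass
class GetUserRequest:
    user_id: str


@dataclass
class GetUserResponse:
    user_id: str
    name: str


class Registry:
    """Hypothetical stand-in for the gRPC runtime: maps method paths to handlers."""

    def __init__(self) -> None:
        self.handlers: Dict[str, Callable[[bytes], bytes]] = {}

    def register(self, path: str, handler: Callable[[bytes], bytes]) -> None:
        self.handlers[path] = handler

    def invoke(self, path: str, payload: bytes) -> bytes:
        return self.handlers[path](payload)


class UserServiceServicer:
    """Server logic: the part a developer writes against the generated interface."""

    def GetUser(self, request: GetUserRequest) -> GetUserResponse:
        return GetUserResponse(user_id=request.user_id, name="Ada Lovelace")


def add_user_service(servicer: UserServiceServicer, registry: Registry) -> None:
    """Plays the role of generated code: unmarshal, call the servicer, marshal the reply."""
    def handle(payload: bytes) -> bytes:
        request = GetUserRequest(**json.loads(payload))
        return json.dumps(asdict(servicer.GetUser(request))).encode("utf-8")

    registry.register("/example.UserService/GetUser", handle)


class UserServiceStub:
    """Client stub: typed methods that hide marshaling and transport."""

    def __init__(self, registry: Registry) -> None:
        self._registry = registry

    def GetUser(self, request: GetUserRequest) -> GetUserResponse:
        payload = json.dumps(asdict(request)).encode("utf-8")
        raw = self._registry.invoke("/example.UserService/GetUser", payload)
        return GetUserResponse(**json.loads(raw))


registry = Registry()
add_user_service(UserServiceServicer(), registry)
print(UserServiceStub(registry).GetUser(GetUserRequest(user_id="42")))
# GetUserResponse(user_id='42', name='Ada Lovelace')
```

In a real deployment, the dictionary lookup is replaced by an HTTP/2 request whose `:path` header carries the same method path.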

Transport and execution

HTTP/2 enables multiplexed streams over a single TCP connection. Each RPC maps to an HTTP/2 stream.

The client encodes messages in protobuf, sends them over HTTP/2, and the server decodes, executes logic, and returns responses. Streaming RPCs open long-lived streams that exchange multiple messages.
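On the wire, each protobuf message inside an HTTP/2 stream is wrapped in a 5-byte prefix: a 1-byte compressed flag followed by a 4-byte big-endian message length, as described in the gRPC over HTTP/2 protocol specification. A unary call carries one such message in each direction; a streaming call carries several on the same stream. This minimal Python sketch frames and re-parses messages (compression is deliberately not handled), assuming the HTTP/2 DATA frames have already been reassembled into one byte buffer.

```python
import struct
from typing import Iterator


def frame_message(payload: bytes, compressed: bool = False) -> bytes:
    """Prefix a serialized message with gRPC's 5-byte header (flag + big-endian length)."""
    return struct.pack(">BI", 1 if compressed else 0, len(payload)) + payload


def parse_messages(data: bytes) -> Iterator[bytes]:
    """Split reassembled stream bytes back into individual message payloads."""
    offset = 0
    while offset < len(data):
        if len(data) - offset < 5:
            raise ValueError("truncated message header")
        flag, length = struct.unpack_from(">BI", data, offset)
        if flag != 0:
            raise ValueError("compressed messages are not handled in this sketch")
        offset += 5
        if offset + length > len(data):
            raise ValueError("truncated message body")
        yield data[offset:offset + length]
        offset += length


# Two messages sent back to back on one stream, as a streaming RPC would.
stream_body = frame_message(b"\x08\x96\x01") + frame_message(b"\x08\x01")
print(list(parse_messages(stream_body)))  # [b'\x08\x96\x01', b'\x08\x01']
```

Because the length prefix delimits messages, HTTP/2 DATA frame boundaries do not need to line up with message boundaries.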

RPC Types

RPC types define how a procedure on a remote system is invoked and how data and control flow between caller and callee. Common types include:

Synchronous RPC: the caller blocks until the callee returns a result; suited to straightforward request-response interactions.

Asynchronous RPC: the caller continues processing and receives the result later via a callback, polling, or message delivery; useful for latency-tolerant or high-throughput systems.

One-way (fire-and-forget) RPC: the caller sends a request without expecting a response; appropriate for noncritical logging or notification tasks.

Streaming RPC: client and server exchange continuous or bidirectional data flows, enabling use cases such as real-time telemetry or media.

Multiplexed RPC: many logical calls share a single connection to improve resource efficiency.

Each type trades off complexity, latency tolerance, reliability, and resource usage; the right choice depends on the application's requirements for consistency, fault tolerance, and performance. gRPC itself defines four method types:

Unary RPC: single request, single response (classic RPC).

Server streaming: client sends one request, server returns a stream of responses.

Client streaming: client sends a stream of requests, server returns a single response.

Bidirectional streaming: both sides exchange streams independently in the same RPC.
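The four shapes can be illustrated with plain Python functions, using generators and iterators as stand-ins for streams. The handler names are hypothetical and there is no network here; the point is only the shape of each call. Python's grpcio library uses a similar convention, where streaming requests arrive as an iterator and a streaming handler yields its responses.

```python
from typing import Iterable, Iterator


# Unary: one request in, one response out.
def square(n: int) -> int:
    return n * n


# Server streaming: one request in, a stream of responses out.
def count_up_to(n: int) -> Iterator[int]:
    for i in range(1, n + 1):
        yield i


# Client streaming: a stream of requests in, one response out.
def total(numbers: Iterable[int]) -> int:
    return sum(numbers)


# Bidirectional streaming: both directions are streams. For simplicity the
# server replies once per message here, although gRPC does not require the
# two directions to alternate.
def running_total(numbers: Iterable[int]) -> Iterator[int]:
    acc = 0
    for n in numbers:
        acc += n
        yield acc


print(square(4))                             # 16
print(list(count_up_to(3)))                  # [1, 2, 3]
print(total(iter([1, 2, 3])))                # 6
print(list(running_total(iter([1, 2, 3]))))  # [1, 3, 6]
```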

Key under-the-hood features

Under the hood, gRPC combines a schema-first workflow built on Protocol Buffers (compact, strongly typed binary serialization) with HTTP/2 as the transport (multiplexed, bidirectional streams with header compression). On top of these it layers pluggable security (TLS and token-based credentials, often applied through interceptors), flow control and backpressure for large numbers of concurrent streams, the four call types (unary, server streaming, client streaming, and bidirectional streaming), multi-language code generation that produces idiomatic client and server stubs, and deadline propagation, cancellation, and configurable retries for robustness in distributed systems.

HTTP/2 framing, multiplexing, flow control, and header compression (HPACK).

Connection reuse across multiple RPCs and services; efficient handling of many concurrent calls.

Built-in deadline and cancellation propagation through the call stack.

Metadata/headers for contextual data and control (auth tokens, tracing IDs).

Pluggable interceptors/middleware for logging, retries, rate-limiting, and authentication.
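Interceptors are typically composed as a chain in which each one can inspect or modify a call (for example, its metadata) before handing it to the next. The Python sketch below is a hypothetical, library-independent version with a logging interceptor and an auth interceptor that adds an `authorization` metadata entry; real gRPC libraries define their own interceptor interfaces with different signatures.

```python
from typing import Callable, Dict, List

Metadata = Dict[str, str]
Handler = Callable[[str, dict, Metadata], dict]
Interceptor = Callable[[str, dict, Metadata, Handler], dict]


def _wrap(interceptor: Interceptor, next_handler: Handler) -> Handler:
    """Bind one interceptor to the handler that follows it in the chain."""
    def wrapped(method: str, request: dict, metadata: Metadata) -> dict:
        return interceptor(method, request, metadata, next_handler)
    return wrapped


def chain(interceptors: List[Interceptor], final: Handler) -> Handler:
    """Compose interceptors so the first one in the list runs first (outermost)."""
    handler = final
    for interceptor in reversed(interceptors):
        handler = _wrap(interceptor, handler)
    return handler


calls: List[str] = []


def logging_interceptor(method: str, request: dict, metadata: Metadata, next_handler: Handler) -> dict:
    calls.append(method)  # record every outgoing method path
    return next_handler(method, request, metadata)


def auth_interceptor(method: str, request: dict, metadata: Metadata, next_handler: Handler) -> dict:
    # Attach a bearer token without mutating the caller's metadata (token is a placeholder).
    return next_handler(method, request, {**metadata, "authorization": "Bearer <token>"})


def send(method: str, request: dict, metadata: Metadata) -> dict:
    """Stand-in for the network call: echoes what would have been sent."""
    return {"method": method, "request": request, "metadata": metadata}


invoke = chain([logging_interceptor, auth_interceptor], send)
result = invoke("/example.UserService/GetUser", {"user_id": "42"}, {"x-request-id": "abc123"})
print(result["metadata"])  # {'x-request-id': 'abc123', 'authorization': 'Bearer <token>'}
```

Keeping cross-cutting concerns in interceptors means individual handlers stay free of auth, logging, and tracing code.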

Importance to Microservices and Other Architectural Patterns

gRPC is a high-performance, open-source RPC framework developed by Google that uses HTTP/2, Protocol Buffers (protobuf), and code generation to enable efficient, strongly-typed communication between services. Its characteristics align closely with the needs of modern distributed systems, making it particularly well-suited to microservices and several other architectural patterns. Below is a focused, practical overview of why gRPC matters and where it fits.

Key Technical Advantages

Efficient transport and multiplexing

Uses HTTP/2: supports multiplexed streams over a single TCP connection, reducing connection overhead and improving throughput for many parallel calls between services.

Lower latency than HTTP/1.1, and no HTTP-level head-of-line blocking between concurrent requests.

Compact, fast serialization

Protocol Buffers: binary format that is smaller and faster to serialize/deserialize than JSON, lowering bandwidth usage and CPU cost—critical at scale.

Strongly-typed contracts and code generation

Service and message definitions in .proto files generate idiomatic client and server stubs for many languages, reducing boilerplate and, in statically typed languages, providing compile-time type safety.

Simplifies cross-team integration and refactoring by enforcing contract-first development.

Streaming support and flexible RPC models

Supports unary RPCs, server streaming, client streaming, and bidirectional streaming. This allows efficient implementations of use cases such as event streams, real-time updates, and bulk data transfer without resorting to separate protocols.

Built-in deadlines, cancellation, and metadata

Client-side deadlines/timeouts and cancellation propagate through the call stack, improving resiliency and preventing resource leaks.

Metadata headers support tracing, authentication tokens, and contextual data.

Why gRPC Fits Microservices

gRPC fits microservices because it provides a high-performance, language-agnostic RPC framework that simplifies inter-service communication. Compact binary serialization (Protocol Buffers), low-latency HTTP/2 transport, and built-in support for streaming and bidirectional flows reduce bandwidth and CPU overhead and enable efficient request/response and event-driven patterns across services. Strong typing and generated client/server stubs enforce clear contracts and reduce integration errors while letting teams evolve APIs safely. gRPC also integrates well with service meshes and modern observability tooling, supports load balancing and deadline/timeout propagation, and scales cleanly in polyglot environments, making it a pragmatic choice for building resilient, performant microservice architectures.

High-performance inter-service communication

Microservices often require many small, frequent calls among services. gRPC’s low latency and compact messages reduce network and CPU overhead compared with JSON over REST.

Strong contracts for polyglot environments

Enterprises often run services in multiple languages. gRPC’s code generation ensures consistent APIs and message shapes across language boundaries.

Easier API evolution and backward compatibility

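Protobuf readers skip fields they do not recognize, and removed fields can be declared reserved so their numbers and names are never reused. Together with optional fields, this enables safe, versioned evolution of service contracts without breaking existing consumers.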
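Old consumers keep working because every field on the wire carries its field number and wire type, so a reader can skip fields it does not know. In this minimal Python sketch, a hypothetical v2 writer adds an `email` field with the new number 3, and a v1 reader that only knows fields 1 and 2 decodes the message and silently ignores the rest. The field names and numbers are assumptions for illustration.

```python
from typing import Dict, Tuple


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte, value = value & 0x7F, value >> 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def decode_varint(buf: bytes, pos: int) -> Tuple[int, int]:
    """Decode a varint starting at pos; return (value, next position)."""
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def encode_user_v2(user_id: int, name: str, email: str) -> bytes:
    """Hypothetical v2 writer: id = 1 (varint), name = 2 and the new email = 3 (strings)."""
    out = encode_varint(1 << 3 | 0) + encode_varint(user_id)
    for number, text in ((2, name), (3, email)):
        data = text.encode("utf-8")
        out += encode_varint(number << 3 | 2) + encode_varint(len(data)) + data
    return out


def decode_user_v1(buf: bytes) -> Dict[str, object]:
    """Hypothetical v1 reader: knows only fields 1 and 2 and skips everything else."""
    user: Dict[str, object] = {}
    pos = 0
    while pos < len(buf):
        tag, pos = decode_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x7
        if wire_type == 0:    # varint
            value, pos = decode_varint(buf, pos)
        elif wire_type == 1:  # fixed 64-bit
            value, pos = buf[pos:pos + 8], pos + 8
        elif wire_type == 2:  # length-delimited
            length, pos = decode_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        elif wire_type == 5:  # fixed 32-bit
            value, pos = buf[pos:pos + 4], pos + 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        if field_number == 1:
            user["id"] = value
        elif field_number == 2:
            user["name"] = value.decode("utf-8")
        # Any other field number is unknown to this reader and is skipped.
    return user


print(decode_user_v1(encode_user_v2(150, "Ada", "ada@example.com")))  # {'id': 150, 'name': 'Ada'}
```

If field 3 were later removed, declaring it `reserved` in the .proto file would stop anyone from reusing the number with a different meaning, which readers built against the older definition could otherwise misinterpret.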

Observability and control

gRPC’s integration points for metadata and interceptors make it straightforward to implement consistent tracing, metrics, and auth patterns across services.

Better support for streaming and long-lived connections

Use cases like server push for notifications, telemetry, or real-time collaboration are more natural with gRPC streams than repeatedly polling REST endpoints.

Architectural Patterns where gRPC Excels

Microservices (synchronous RPC-heavy architectures)

gRPC is a strong choice where services communicate with low latency and high throughput and where strong typing and client stubs reduce development friction.

Service mesh integration

Works well with service meshes and proxies (e.g., Istio and the Envoy proxy it builds on) that support HTTP/2 and provide gRPC-aware load balancing, observability, and policy enforcement.

Backend-for-Frontend (BFF)

Use gRPC for internal BFF-to-backend calls to aggregate data efficiently, while exposing REST/GraphQL to public clients if necessary.

Real-time and streaming systems

Bidirectional streaming supports chat, live telemetry, multiplayer game state sync, or real-time analytics pipelines without adding separate protocols.

Polyglot microservices and cross-platform clients

Strong code generation is useful when backend services are implemented in different languages or when native clients (mobile, desktop) need efficient, typed access.

Edge and IoT gateways

Compact messages reduce bandwidth on constrained networks, and multiplexing lets many small messages share one connection. Intermittent connectivity still calls for reconnection and retry handling, since a dropped connection ends any open streams.

When to Prefer Alternatives

Public-facing HTTP+JSON APIs

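For broad public consumption by web browsers and third-party developers, JSON/REST or GraphQL may be better thanks to their ubiquity, human readability, and native browser support; browsers cannot call gRPC services directly and typically need gRPC-Web plus a translating proxy.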

Simpler or document-centric interactions

If APIs are primarily document exchanges, REST with JSON may suffice and lower complexity around tooling and developer onboarding.

Environments where HTTP/2 or binary protocols are restricted

Some legacy networks, proxies, or corporate environments may not fully support HTTP/2 or may inspect/require JSON; in those contexts, REST or GraphQL may be more practical.

Operational Considerations

Observability and tooling

Instrument gRPC endpoints with distributed tracing, structured metrics, and logging. Use interceptors to standardize auth, tracing propagation, and metrics.

Load balancing and retries

Prefer gRPC-aware load balancers and client-side retry policies that understand idempotency and deadlines. Ensure retry logic respects deadlines and avoids amplifying load.
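A minimal Python sketch of a client-side retry loop that follows this advice: it retries only idempotent calls, uses jittered exponential backoff, and passes the remaining deadline budget to each attempt instead of restarting the clock. Parameter names loosely mirror the fields of gRPC's retry policy (maximum attempts, initial backoff, backoff multiplier, maximum backoff), but the function, `TransientError`, and the simulated flaky call are hypothetical; in production, prefer the retry support built into your gRPC library or service mesh.

```python
import random
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a retryable status such as UNAVAILABLE."""


def call_with_retries(
    rpc: Callable[[float], T],
    *,
    idempotent: bool,
    deadline_s: float,
    max_attempts: int = 4,
    initial_backoff_s: float = 0.1,
    multiplier: float = 2.0,
    max_backoff_s: float = 1.0,
) -> T:
    """Retry an RPC with jittered exponential backoff, never past the overall deadline."""
    deadline = time.monotonic() + deadline_s
    backoff = initial_backoff_s
    attempt = 1
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError("deadline exceeded")
        try:
            return rpc(remaining)  # each attempt gets only the time that is left
        except TransientError:
            if not idempotent or attempt >= max_attempts:
                raise
        # Full jitter: sleep a random fraction of the backoff to avoid synchronized retries.
        sleep_s = random.uniform(0, backoff)
        if time.monotonic() + sleep_s >= deadline:
            raise TimeoutError("deadline would be exceeded before the next attempt")
        time.sleep(sleep_s)
        backoff = min(backoff * multiplier, max_backoff_s)
        attempt += 1


attempts: List[float] = []


def flaky_get_user(timeout_s: float) -> str:
    """Simulated call that fails twice with a transient error, then succeeds."""
    attempts.append(timeout_s)
    if len(attempts) < 3:
        raise TransientError("UNAVAILABLE")
    return "Ada"


result = call_with_retries(flaky_get_user, idempotent=True, deadline_s=2.0)
print(result)  # Ada
```

Refusing to retry non-idempotent calls and capping total time at the caller's deadline are what keep retries from amplifying load during an outage.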

Final Thoughts

gRPC and microservices together form a powerful foundation for building scalable, high-performance distributed systems: gRPC delivers efficient, strongly typed communication and language interoperability, while a microservices architecture enables independent deployment, clearer ownership, and better fault isolation. For teams evaluating the approach, start by identifying a small, noncritical domain to decompose into services, define precise protobuf contracts, and set up a CI/CD pipeline with automated integration and contract tests. Include observability from the outset with metrics, distributed tracing, and centralized logging, and consider a service mesh or API gateway for traffic management and security as your ecosystem grows. Investing time in clear service boundaries, a versioning strategy, and developer tooling will pay off in reduced coupling and faster iteration. If you’d like help planning a migration, designing contracts, or building a proof of concept, A.M. Tech Consulting can guide you through architecture reviews, implementation best practices, and operational readiness to get your team productive quickly.